Assessment + Feedback

Overview

Assessment + feedback may differ for a Dual Delivery subject. For guidance specific to Dual Delivery, please see BEL+T’s Guidance for Dual Delivery page.

Assessment

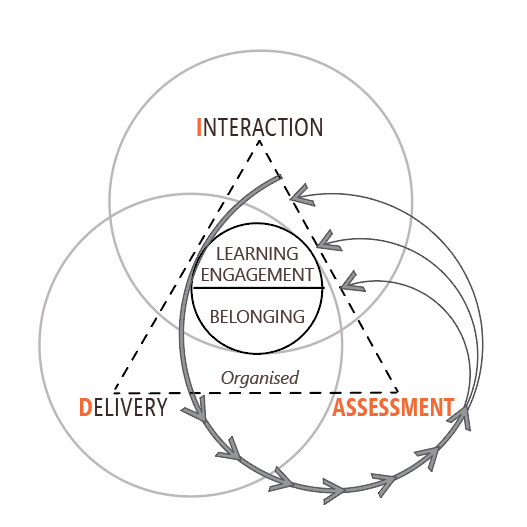

The multiple paths of the Assessment element in the DIAgram indicate the importance of aligned assessment actions in students’ learning experiences. As with the other elements, Assessment is not a stand-alone activity, and in the DIAgram all of these overlap, influence and inform each other. You will find details, examples and tool guidance via the Delivery + Interaction + Assessment links.

This page contains student-centred content organised and curated through a teacher-centred lens to support educators through the cycle of Assessment + Feedback. We define assessment as the search for evidence of learning, which, when designed and delivered effectively, provides valuable evidence to support students in their learning and provides educators with relevant information that can positively direct and guide their teaching practices.

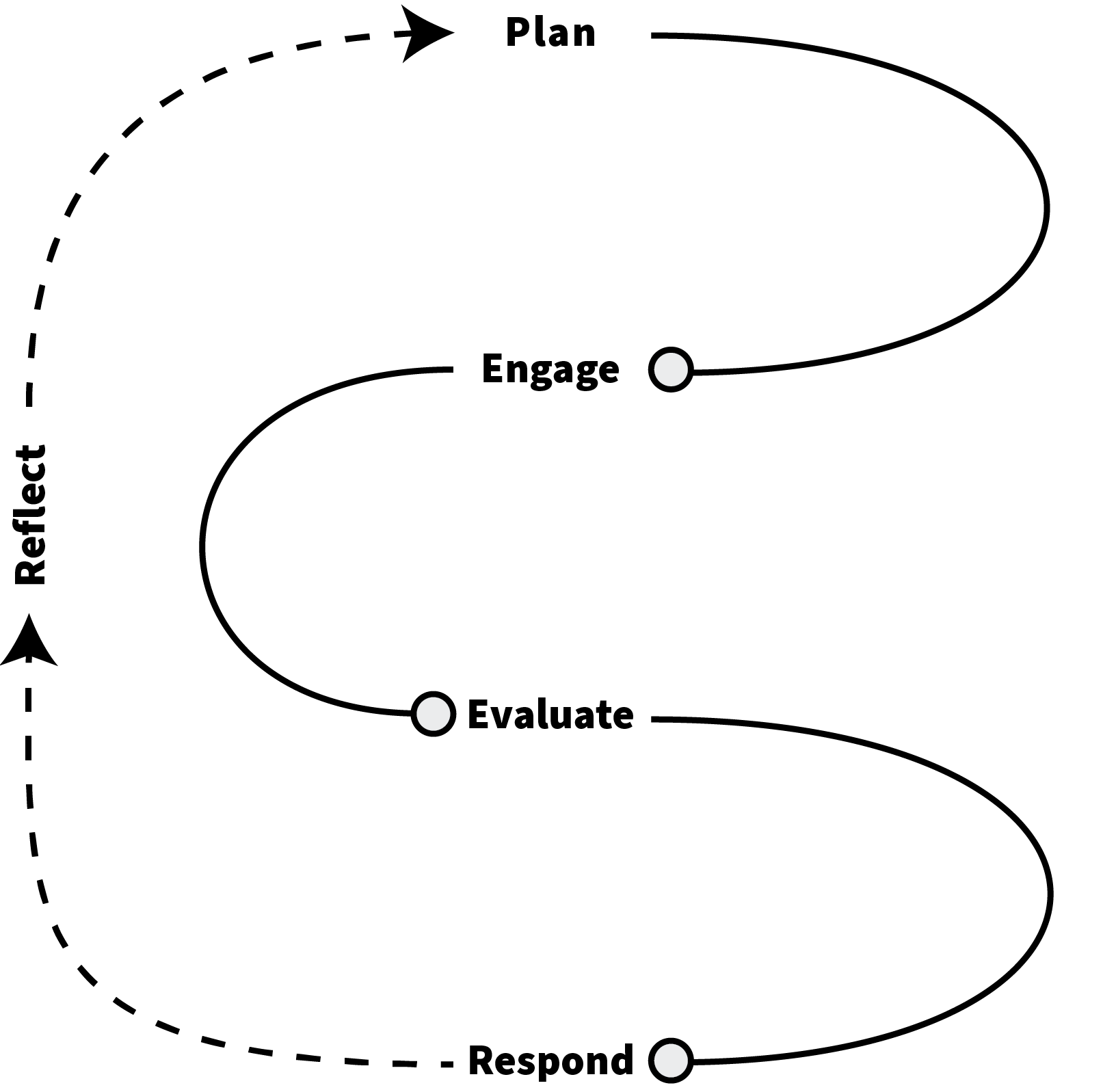

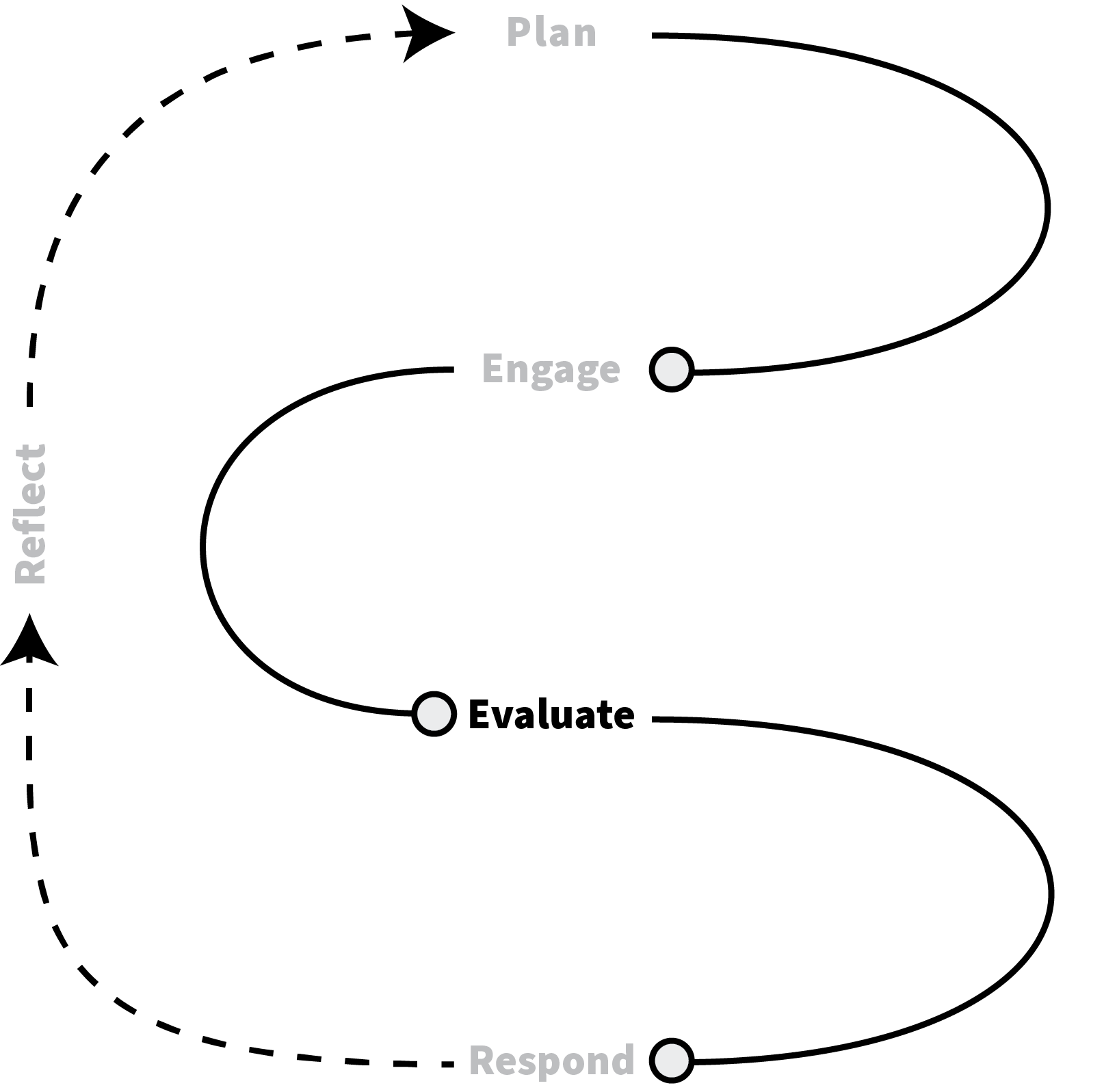

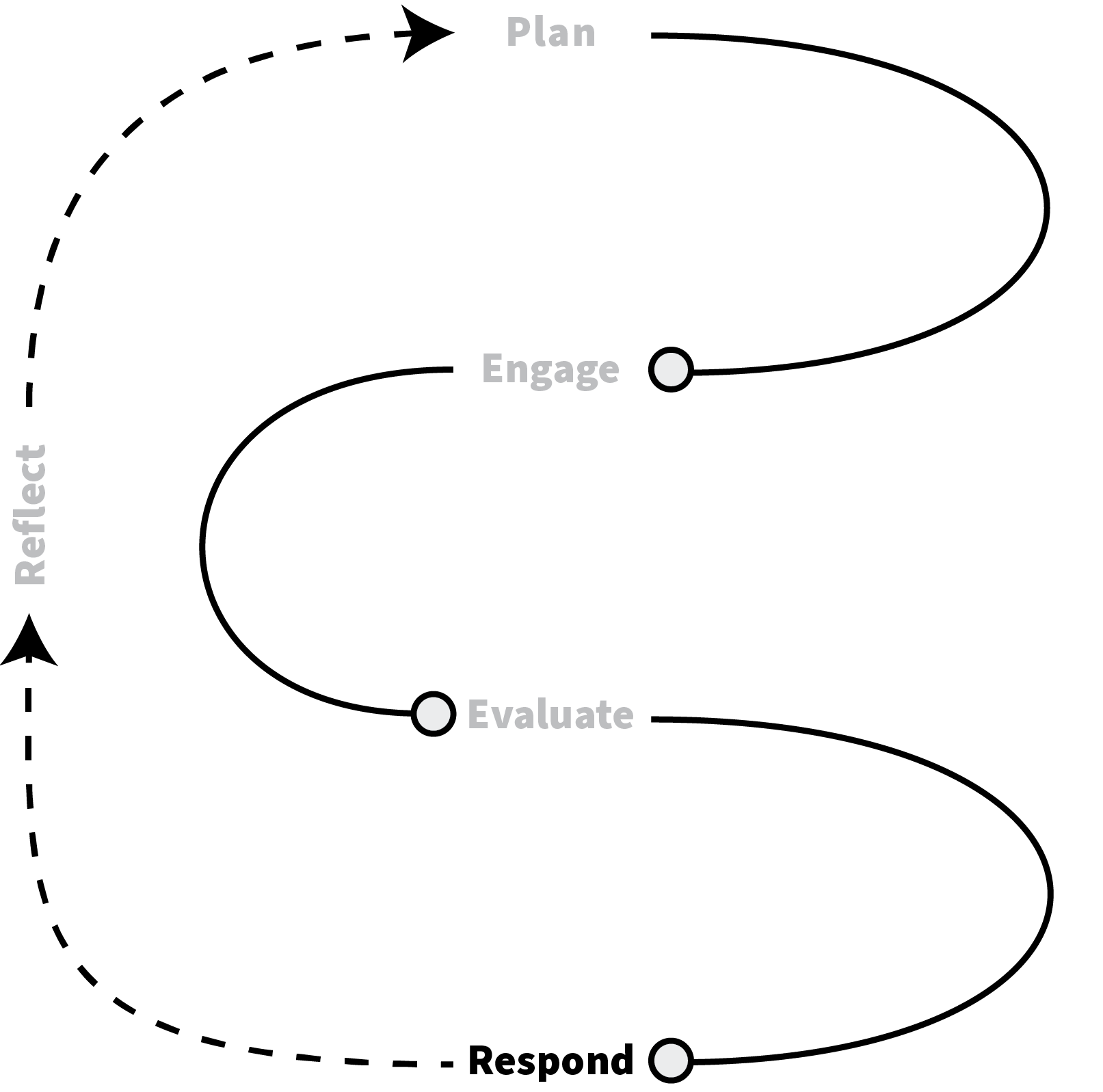

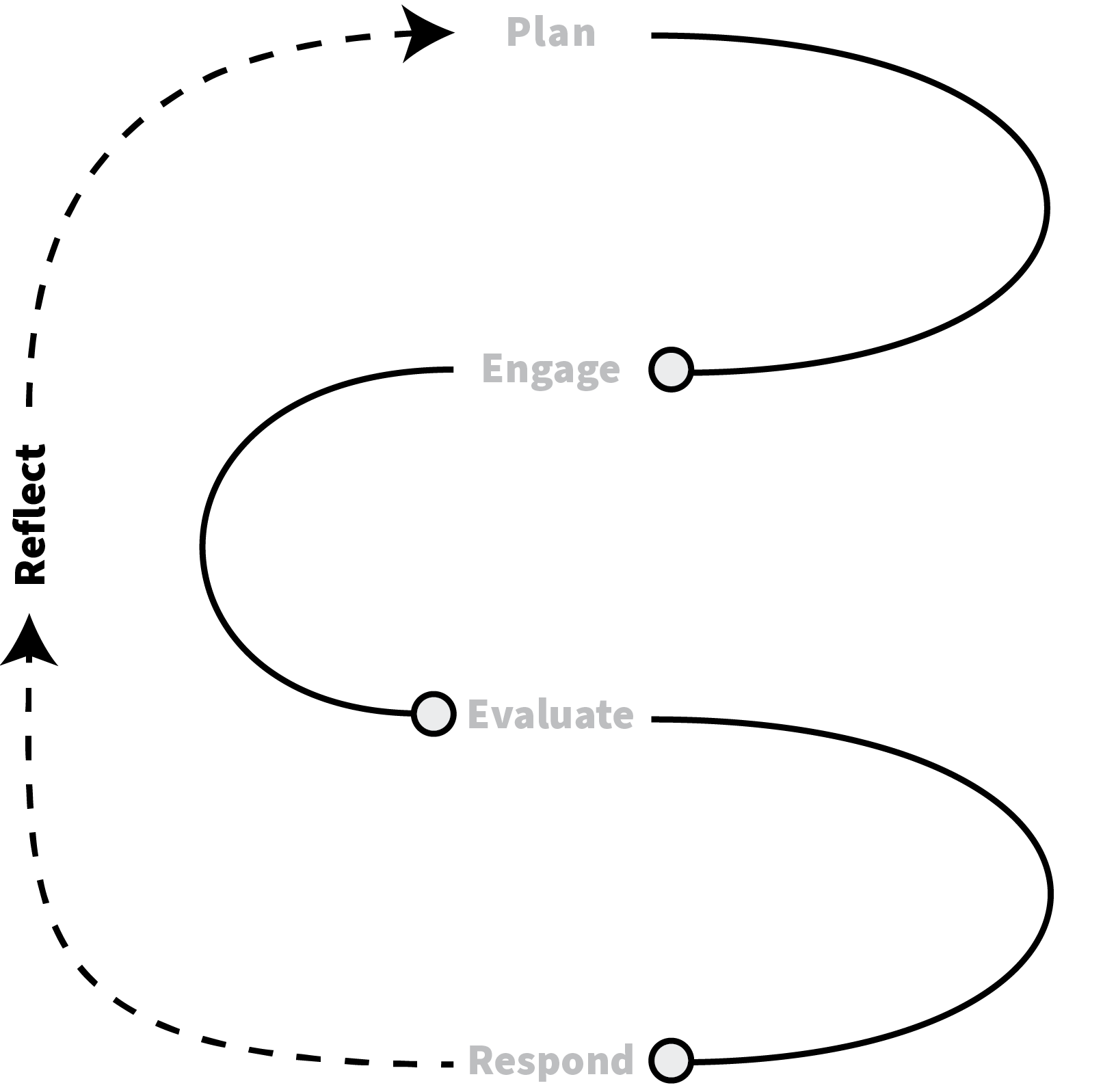

The Assessment + Feedback (A+F) Cycle consists of five stages as illustrated below.

The A+F Cycle illustrates the general process of designing and delivering assessments and feedback, but it should be noted that the implementation of this process may vary depending on the specific context of the instructor and institution. The sequence of the stages should be followed, but the timing of each stage in relation to the teaching period will be different and dependent on the subject's needs.

The following table provides a brief description of each stage of the A+F Cycle:

| Stage | Description | On This Page |
|---|---|---|
| Plan | The first step in the A+F Cycle involves the design of the assessment task. During this process, educators should consider several key factors to ensure student learning is evidenced as accurately as possible. This includes mapping the intended learning outcomes (ILOs) to the assessment task, determining grade weightings and considering comments from past students. | Assessment Design, Rubric Design, Feedback Literacy |
| Engage | Collecting evidence of student learning is an integral part of the A+F Cycle. This process should take into account factors such as the use of rubrics, the type of assessment (summative or formative) and the mode of assessment. | Use of Rubrics, Student Presentations / Design Reviews, Desk Crits |
| Evaluate | Once the evidence is collected, it's important to analyse and evaluate the data. This includes moderating the data, reviewing requests for extensions and special considerations, and checking submissions to ensure academic integrity. | Assessment Measures and Guidance, Formative Assessments, Summative Assessments |
| Respond | After students have completed the assessment task, it's important to provide them with feedback on their performance and guidance on how to continue learning. This includes giving them insight into their current level of understanding and providing suggestions for the next steps in their learning process. | Feedback Modes, ESS Guidance |
| Reflect | Effective A+F practice involves educators reflecting on the previous four steps, with the goal of identifying opportunities for enhancement and adjustments to be made for future instruction. | Reflective Questions |

Additional factors to consider in supporting student learning through Assessment + Feedback include:

Special Consideration

The University's policies and procedures for Special Consideration cover a variety of situations that may impact a student's performance. The University has also issued specific guidance on special consideration documentation for those affected by COVID-19.

Academic Integrity

Academic integrity is a vital topic when it comes to assessment and feedback in higher education. It is widely acknowledged that, with the increasing sophistication of and easy access to assistive technology (such as AI-powered text/image generators) and the increased pressure on students to succeed, more and more learners are rationalising opportunities to gain an academic advantage over their peers (White, 2020). Ensuring academic integrity is essential for maintaining the standards of The University of Melbourne's degrees. To help reduce the risk of academic misconduct in online assessments, coordinators can take the following actions:

- Use high-stakes assessment tasks with caution. Research has shown that cheating is more likely on heavily weighted, high-stakes tasks (see Contract Cheating and Assessment Design, Bretag et al., 2019).

- Substitute high-stakes assessment with smaller, scaffolded or linked tasks. This allows students to receive feedback on their progress and for teachers to see examples of student work-in-progress.

- Familiarise yourself with the types of academic misconduct that are commonly detected in ABP subjects. BEL+T has developed Academic Integrity Guidance that defines nine types of academic misconduct, with guidance on how to address suspicions of misconduct.

- Be explicit when explaining the expectations for how students should complete an assessment task. The following paragraph has been reviewed by the ABP Academic Support Office (ASO) as a message to students about collusion in open-book online exams:

  The online exam on [insert date] is an individual assessment task. Students must complete the task without any input from other individuals. While the exam is open book, students are reminded not to share study notes or summaries of the lecture content. The University of Melbourne takes academic integrity seriously and provides advice here for students about how to avoid academic misconduct. There are serious consequences for students who commit academic misconduct. Penalties can include terminating or suspending the student’s enrolment. Details are provided here.

- Require students to sign a plagiarism declaration when submitting assessment tasks. Coordinators can either provide students with the following text to include as part of their submission, including through Canvas submissions, or require students to use the ABP Coversheet:

  By submitting work for assessment I hereby declare that I understand the University’s policy on academic integrity and that the work submitted is original and solely my work, and that I have not been assisted by any other person (collusion) apart from where the submitted work is for a designated collaborative task, in which case the individual contributions are indicated. I also declare that I have not used any sources without proper acknowledgment (plagiarism). Where the submitted work is a computer program or code, I further declare that any copied code is declared in comments identifying the source at the start of the program or in a header file, that comments inline identify the start and end of the copied code, and that any modifications to code sources elsewhere are commented upon as to the nature of the modification.

- Use tools like Turnitin Similarity Report, which can be set up in Canvas for text-based assignments, to detect plagiarism. Click here for details.

This guidance has been informed by Learning Environments’ advice, UoM policy, a review of relevant scholarship and BEL+T’s ongoing work within the Faculty. For more on assessment-related learning tools, visit our Learning Tools page in the Canvas section of the Teaching Toolbox.

Plan

Effective assessment and feedback require thorough planning and coordination. When designing or reviewing assessment tasks, educators should consider several key factors to ensure that student learning is evidenced as accurately as possible. This includes, but is not limited to, mapping Intended Learning Outcomes (ILOs) to the assessment task, determining grade weightings and considering comments from past students.

When planning an assessment task, educators should ask themselves the following questions:

- What tasks or activities can students engage in to demonstrate their learning through observable evidence (i.e. what they do, say, make and/or write)?

- How can evidence of students' learning be recorded?

- Does the assessment design allow students to demonstrate learning as outlined by the ILOs?

- What improvements or approaches can be implemented in the assessment design based on student feedback?

- Does the assessment and feedback design offer opportunities for students to engage and develop their feedback literacy?

This page offers guidance to assist educators in creating assessments that effectively demonstrate student learning and suggests ways to use student feedback to enhance assessment design.

BEL+T has produced a set of Tactics for Assessment Related Expectations in alignment with the End of Subject Survey (ESS) questions that relate to assessment and feedback. This guidance presents student commentary, tactics used by subject coordinators and points to consider when applying them.

Assessment Design

Assessment design is an important aspect of pre-semester planning. This includes writing/reviewing the ILOs for each subject which are published in the University of Melbourne Handbook. Assessment tasks should be devised as a means for students to evidence the degree to which they have achieved each ILO. Subsequent assessment procedures can thus be seen as the search for this evidence. Through their engagement with these activities, “students develop and demonstrate the ability to judge the quality of their own work and the work of others against agreed standards” (Boud et al, 2009).

When providing submission instructions and details, consider doing the following:

- Cluster instructions into small, manageable chunks (e.g. according to the mode of submission).

- Use checklists to clearly communicate expectations for student submissions.

- Provide clear and explicit instructions on what is expected of students, and by what date and time.

- Consider including a hyperlink to the Canvas submission location.

Ideally, online assessment should consist of several small, low-weighted assessment tasks. This may require restructuring high-stakes assessments into smaller tasks. Here are some tips to keep in mind when revising previous assessment designs:

- Identify the reasons for the adjustments (e.g. changes in access to facilities, materials, or hardware).

- Refer to the subject ILOs.

- Review past assessments and student marks to identify areas that may need revision.

- Consider the online platforms and tools available.

Rubric Design

In conjunction with designing assessment tasks to accurately evidence students' learning, there needs to be a means to record this evidence. Rubrics are rules for coding and recording the quality of student work (Griffin, 2018). A rubric is essentially a "short-hand" method of recording what is being evaluated in the students' performance, production, communication, and/or written submission as required by the assessment task. It is important to note that these rules should focus on manifested student behaviour as observable evidence, taking the form of what a student can do, make, say or write.

Effective rubrics give clear guidance to both assessors and students by providing a set of descriptions for what should be looked for as evidence of student learning in the assessment task. Furthermore, they provide students with guidance to continue to develop and improve their knowledge and skills. The act of developing a rubric is also a way of making implicit dimensions of assessment explicit to teachers to assist in marking and moderation. This transparency becomes all the more critical in contexts of remote assessment and in design-based tasks (Jones, 2020).

The language used to describe the levels of increasing competence is crucial in providing clear guidance to both assessors and students. Here are some helpful tips to use when beginning the process of designing or reviewing a rubric:

- Use cognitive or action verbs to start the criteria to emphasise the specific student behaviours that are being observed.

- Use verbs that clearly describe the differences between each level of competence.

- Minimise the number of words describing each level of competence.

- Select words that will be meaningful to all users (both assessors and students) of the rubric.

- Use discipline-specific, academic terms that are familiar to students.

- Use clear, non-judgmental and positive language.

- Be consistent, fair and measurable.

Furthermore, collaborating with the teaching team in the design and review process is essential in creating a rubric that is useful for both assessors and students. The teaching team can provide valuable feedback on how to improve the quality of the language for future use, and the collaborative design process promotes peer accountability and ensures a shared understanding of the standards for student work. When collaborating in the rubric design/review process, it is important to keep in mind the following:

- Good quality rubrics should be clear, concise, and free of jargon, making them easily understandable for both assessors and students.

- Rubrics should be phrased using positive, supportive language that emphasises what the student is able to do, rather than using comparative or judgemental language.

- Successful rubrics are developed from a pool of draft criteria - write more than needed and then refine through the process.

- The design of the rubric is an ongoing process. Rubrics should be reviewed and updated regularly with feedback from both assessors and students.

Feedback Literacy

Feedback plays a critical role in learning and achievement by providing students with guidance and direction to support their development. As such, it is important to consider the timing and communication method (e.g. written or verbal) of feedback, as well as its consistency and equity across members of the teaching team.

Though the content and quality of feedback provided to students are important, it is just as important to support the development of students' feedback literacy. Feedback literacy is a learned skill that enables students to comprehensively engage with feedback by allowing them to:

- Appreciate and understand the value of feedback

- Make judgments about the quality of their own work

- Manage their emotional response to feedback

- Take action in response to feedback (and close the feedback loop)

Educators can foster students' feedback literacy by structuring feedback as a roadmap for their learning journey. Effective feedback should ask and answer these three questions:

- Where am I going? (the goals)

- How am I going?

- Where to next?

For further guidance on how to support the development of students' feedback literacy, please check out BEL+T's Feedback Literacy & Practices guide.

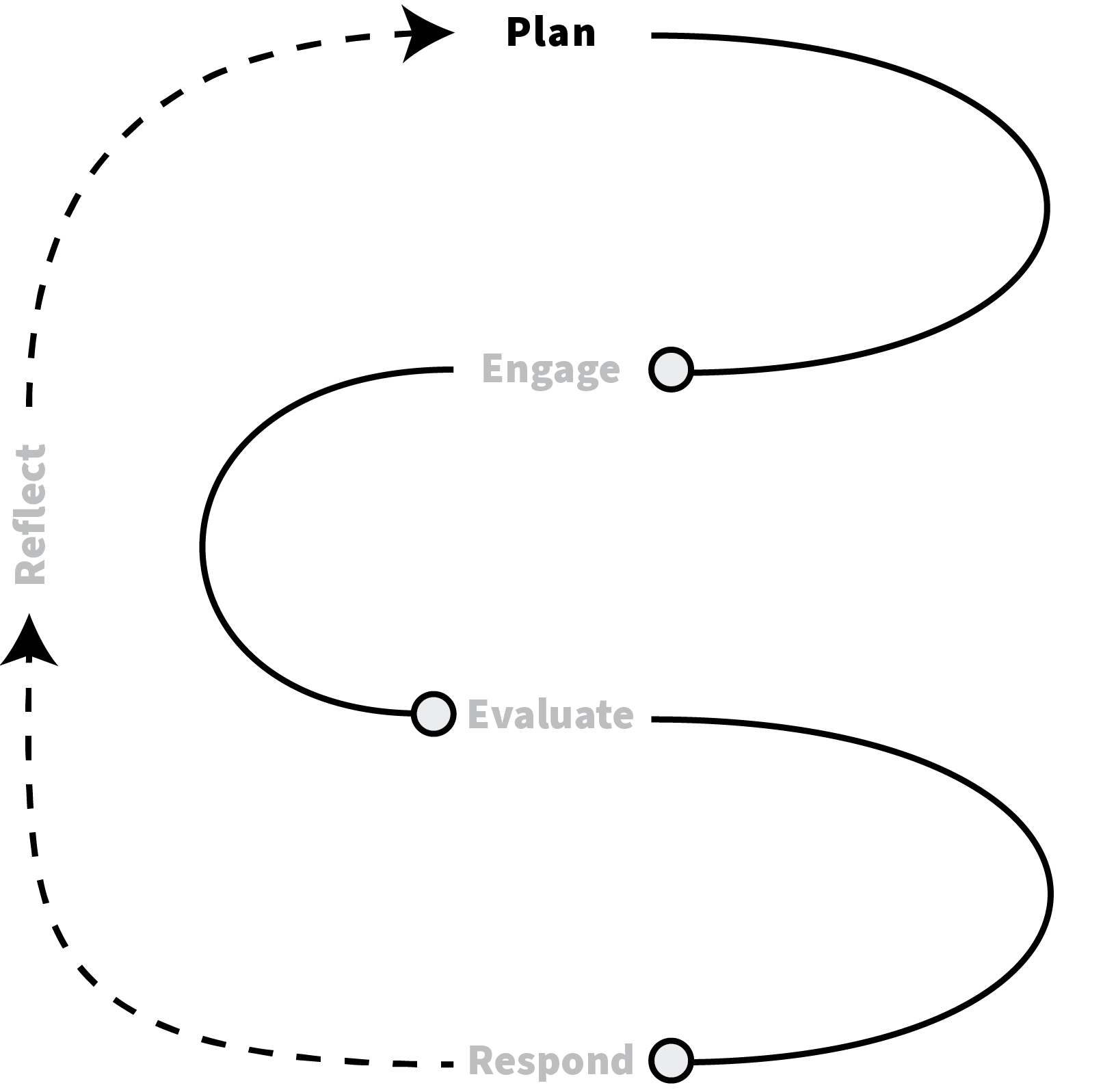

Engage

Assessments are crucial for measuring student learning and evaluating their progress. Properly designed assessment tasks give educators insight into areas where students excel and areas where additional support is needed. This helps educators to adjust the subject content and delivery to better support student learning. Data can be collected in a variety of ways, such as through the use of rubrics, feedback, and markups on drawings.

When designed and implemented successfully, assessment and feedback can enhance student engagement by setting clear learning goals and providing feedback on progress and development. The Engage stage of the A+F cycle includes considerations such as:

- How will observable evidence of learning be measured? (e.g. rubrics)

- What are some key considerations to be aware of when observing studio-based assessments? (e.g. studio presentations, design reviews, desk crits)

- How do assessment and feedback contribute to creating a supportive learning environment?

The following content provides guidance and support for subject coordinators and educators who are currently working through, or planning for, the Engage stage of the A+F cycle.

Use of Rubrics

Rubrics should be given to students at the start of each assessment task as a guide for their learning. They provide qualitative language (ideally using active verbs) for coding and recording evidence of learning and the quality of learning. This helps to clarify the assessment procedures by linking the learning objectives to grade bands and the intention of the assessment task for learning. Ultimately, a good rubric should give students an understanding of where they are currently in terms of their learning and guidance on what they need to focus on to improve and continue developing their knowledge and skills.

Digital rubrics can be created and used through Canvas. Instructions on how to set up Canvas Rubrics for assessments can be found via this link, and guidance on managing rubrics in courses can be found here. Keep in mind that some subjects may require adjustments to the default matrix system in Canvas. In these cases, subjects may use PDF files of assessment rubrics, which tutors can either fill out digitally or print, complete by hand and share with students as scanned copies. Digital documents can be attached to assessments via Canvas SpeedGrader. Note that if grades have been hidden from students, feedback and attachments will not be accessible until grades are published.

Student Presentations / Design Reviews

Student presentations and subsequent feedback can both be conducted synchronously, asynchronously or by mixing the two modes. Synchronous interaction can be beneficial for feedback that requires a more conversational approach, while an asynchronous method of allowing students to submit pre-recorded presentations can ease student concerns about public speaking and alleviate potential technical issues.

For asynchronous approaches, you can direct students to specific audio/video screencast software that you may already use yourself for delivery of content and feedback. Be sure to provide clear instructions on how students can upload video files to Echo360 on Canvas and share them with instructors. Teachers can then group uploaded videos in Echo360 and provide any external reviewers with a link.

For synchronous presentations, consider requesting students to submit their slides and scripts as a backup in case of technical difficulties. For further guidance on asynchronous and synchronous presentations and providing feedback on online presentations, visit the Learning Environments' assessment and feedback pages.

Design studio reviews can present unique challenges when conducted in virtual or blended environments. To address this, the following document provides studio leaders and subject coordinators with guidance on general good practice in both on-campus and online delivery of Design Presentations and Reviews. The document also contains a flowchart, developed by BEL+T, to aid in the coordination of online design reviews.

Planning Assessment in the Design Studio

After further input from studio tutors, BEL+T developed a flowchart to aid in the coordination of online design reviews. The flowchart illustrates the various steps and key decisions involved in this process.

Coordinating Online Reviews Flowchart

Instead of simply transferring the traditional design review format to an online environment, the ideal approach incorporates the benefits of both asynchronous and synchronous modes of interaction and assessment to enhance learning. This experience may forever change the model for final design reviews for the benefit of all.

Desk Crits as Critical Dialogue

One of the most fundamental modes of interaction and assessment in our Faculty, and design-based subjects in particular, is the “desk crit”, in which students and tutors engage in live, informal but critical discussions about ongoing work. This dialogue between tutor and student can be conducted effectively in a physical space (on campus) and in a digital environment (online). Engaging in this informal mode of engagement in either learning environment requires consideration and ideation around the structure of the dialogue content and coordination of how the conversation will occur.

Tutors and students who are new to either form of desk crit may need to learn about the required processes to facilitate this interaction, including:

- in what format the work will be presented (e.g. physical print out, digital image on an online whiteboard)

- how the feedback will be delivered (e.g. verbal feedback, annotations on works, marked rubrics)

- when this feedback will be delivered (e.g. synchronously during class, asynchronously through digital sticky notes/comments)

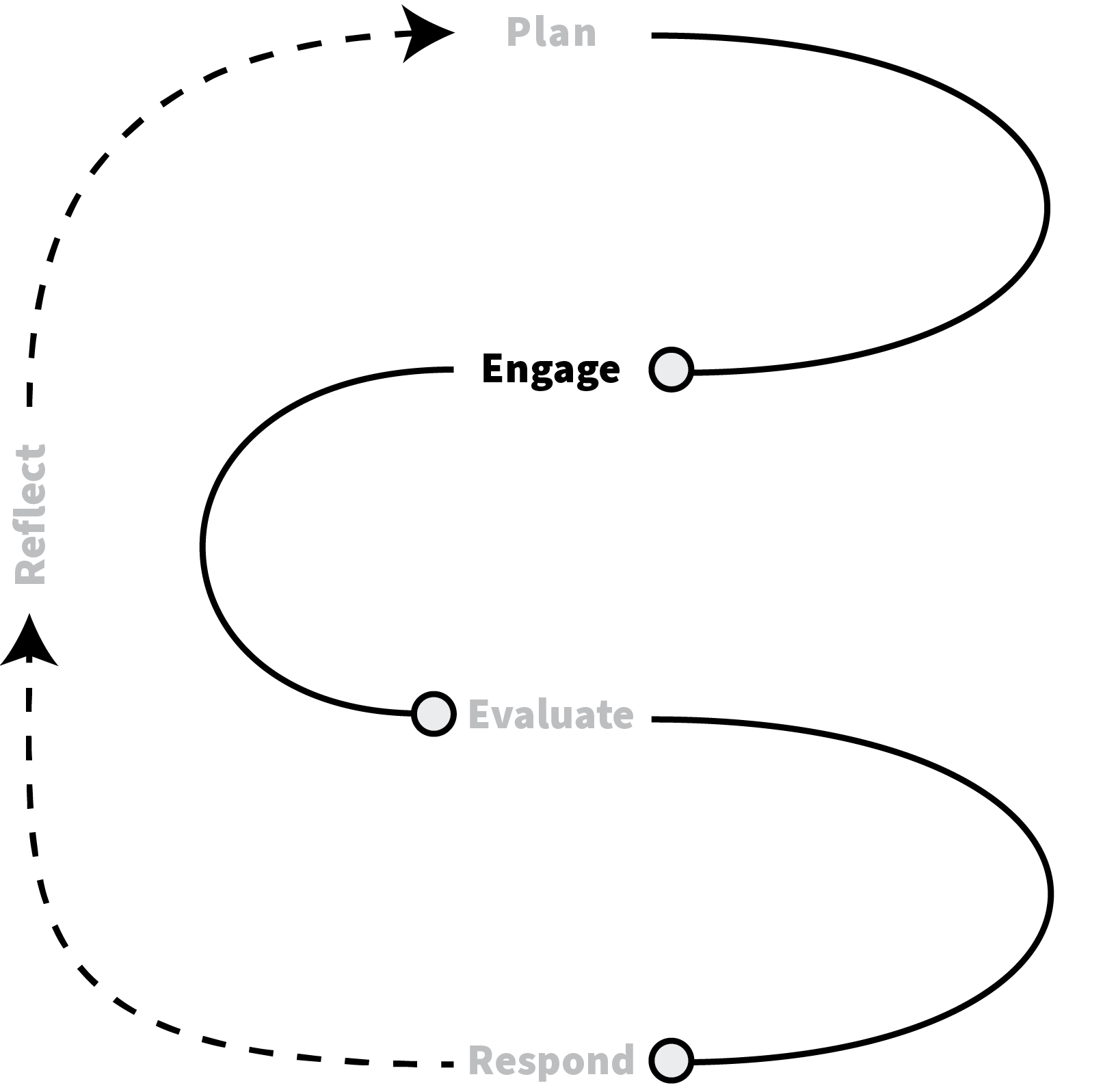

Evaluate

The next step in the Assessment and Feedback Cycle is the analysis and evaluation of the student responses to the assessment task. This process involves reviewing the student work, evaluating their understanding and application of the material, and assessing their performance against the established criteria. It also involves moderation, reviewing extensions and Special Consideration, and appraisal of Academic Integrity. The data gathered from this analysis can be used to identify areas of success and areas for improvement, and inform future teaching strategies and support for the students.

Assessments are commonly classified into two categories: formative and summative, each serving a distinct purpose. Formative assessments are used to support and improve student learning by providing feedback on their progress and understanding of the material. They are used to identify areas of strength and weakness in student learning and to inform the teaching and learning process. Summative assessments, on the other hand, are used to evaluate student learning and to create accountability, ranking and/or accrediting competence by judging student achievement. They are used to determine students' final grades and levels of mastery, and to make decisions about student progress. The choice of formative or summative assessment methods is based on the needs and learning objectives of the subject (Schellekens et al., 2021), and is explored in more detail later on this page.

Assessment Measures and Guidance

Moderation

Moderation is one of the most powerful research-based strategies for linking assessment to improved pedagogical practice in education. The moderation process supports the development of a consistent understanding of what learning looks like by examining examples of different types and quality of students' work and evaluating their alignment with formalised criteria and/or standards (e.g. rubrics) (Bloxham, Hughes and Adie, 2016; Adie, Lloyd and Beutel, 2013). This process can either be conducted among educators or between educators and students.

The key purposes of moderation include:

- Equity: ensuring consistency and fairness for students by establishing a common understanding of standards across all assessors.

- Justification: establishing a credible and robust rationale to support the decision-making of what is considered as evidence of learning.

- Accountability: ensuring that all assessors adhere to established assessment marking requirements (e.g. marking protocols, alignment with rubric criteria) and meet desired outcomes (e.g. acceptable grade distribution).

- Community building: providing an opportunity for teachers to collaborate and review standards of what learning looks like. This collaboration allows for the potential to (re)calibrate educators' judgement by sharing interpretations of criteria and standards, which may identify areas for improvement in assessment and rubric design.

When planning for moderation, timing is an important factor to consider. Best practice involves engaging in moderation sessions at all stages of the A+F cycle. This might include:

- An overview during the planning (Plan) of the assessment(s) to ensure all assessment items, such as instructions, marking procedures, rubrics and feedback, are aligned with the intended learning outcomes. It is also an opportunity to ensure the teaching team has a consistent understanding of what is being observed as evidence of learning through student work.

- A review of marking standards during and/or after the marking process (Engage, Evaluate) to ensure the consistency and accuracy of grades and feedback given to students (Respond).

- A reflection on and evaluation of processes to identify areas for improvement (Reflect).

Some approaches to moderation include:

- Cross-marking with follow-up meetings for discussion and comparison

- Comparing approaches to feedback (amount provided, tone of language, etc.)

- Dividing an assessment task into sections and assigning each section to an individual assessor to mark across the whole cohort, rather than having each assessor mark the entire task for a subset of students (e.g. one person marks all responses to a specific question or oversees all student presentations on a particular topic).

- Scheduling moderation meetings where the highest and lowest grades are discussed in order to establish consistency in the marking practices of the teaching team.

Formative Assessments

Formative assessments can be considered a diagnostic approach to monitoring students' learning. Grading of formative assessment tasks provides educators with useful information on how to tailor teaching approaches to individual students' learning needs. This is often evident in desk crits in Design Studios, where tutors provide feedback and guidance in response to students' work-in-progress.

Formative Assessment Tools and Guides

The goal of formative assessment is "to monitor student learning to provide ongoing feedback that can be used by instructors to improve their teaching and by students to improve their learning" (Eberly Center, 2020). This makes formative assessment a “low-stakes” endeavour as opposed to “high-stakes” summative assessment tasks like end-of-semester exams. Be sure to visit the Learning Environments page on during-semester digital assessment options for University-wide guidance.

Formative online quizzes are an effective, low-stakes mechanism for gauging student progress. Quizzes can be managed through Canvas. The University also provides full licenses for the Microsoft Office 365 suite, which contains useful e-learning tools for students to use in their assessment tasks. For example, Microsoft Sway is a quick and easy way for students to design blogs or newsletters.

Summative Assessments

Summative assessments can be considered an evaluative approach to gauging student learning relative to the subject's goals (e.g. ILOs). Summative assessments are given periodically (often at the end of a topic and/or teaching period) to determine what students know and do not know. They also provide educators with a useful means of evaluating the quality and relevance of subject content, the alignment of the curriculum, which teaching practices are working or not working, and the overall performance of the cohort.

Student Presentations / Design Reviews

Design studio reviews can present unique challenges when conducted in virtual or blended environments. To address this, the Directors of the MSD and Bachelor of Design, in consultation with BEL+T and Pathway/Program Coordinators, have created guidelines for conducting online reviews.

Planning Assessment in the Design Studio


Exams and End-of-Semester Assessments

The Central Exams Unit and Learning Environments will prepare Canvas exam shells for scheduled online exams. Please note that there is a range of support and resources available to assist subject coordinators.

The Learning Environments website offers guides and an overview of different assessment options. Options include LMS Assignments, LMS Quizzes, and external tools (Gradescope and Cadmus).

Please check the live workshops and webinars offered by Learning Environments for targeted sessions. Drop-in sessions are offered to assist with the setup of exams, and pre-recorded webinars are also available.

Additional resources to support digital exams are available on the Digital Assessment website. The Central Exams Unit has also prepared a Guide for Semester 2 Exams – Subject Coordinator (intranet access required).

When writing exams, please be sure to consider copyright issues. The Melbourne Centre for the Study of Higher Education has produced guidance on moving from closed-book to open-book exams. Additionally, use the Exam Content Checklist to confirm that the subject exam is ready.

For any technical inquiries, please submit a request to the Learning Environments team via ServiceNow.

Respond

Once students have demonstrated their learning by engaging with the assessment task, they will need a response that tells them how they are doing. This involves providing feedback on how they are currently performing, along with guidance on what they can do next to continue actively learning.

Feedback is one of the most powerful influences on student achievement, yet the right “Goldilocks formula”, a balanced set of constructive, positive, and meaningful responses, is very hard to achieve. An integral partner to assessment, feedback is a powerful process loop for learning that gives students guidance and support in becoming independent, confident, life-long learners. The following content reviews several modes of feedback that educators can select or design to scaffold student learning and development.

For further guidance, BEL+T has authored a set of Tactics for Feedback in alignment with student responses in the End of Semester Survey (ESS).


Feedback Modes

Good feedback plays a crucial role in guiding learning by identifying areas of development and progress. It allows students to reflect on not just their grades, but also their learning process, enabling them to evaluate their own methods and approaches to learning (Griffin, 2018). Modes and methods of providing meaningful feedback are particularly important in an online learning environment, where opportunities for delivery of informal feedback are limited.

Online Feedback Strategies (Learning Environments)

Written feedback is a useful way of highlighting to students how their work aligns to specific criteria in assessment rubrics. Individual feedback can be tailored and delivered directly through Canvas or Gradescope. Alternatively, general comments can be shared with the entire subject cohort. Such general comments on cohort-wide progress can help frame widespread challenges and achievements, whilst also being an efficient way to deliver feedback (see Poyatos-Matas & Allan, 2005).

Recorded audio/video comments can also provide students with detailed formative feedback in relation to specific criteria. Screencast feedback brings together audio/video with the ability to narrate annotations of student work (VoiceThread, FastStone and OBS Studio are software used by ABP colleagues to record feedback). Often, annotating student work is the most appropriate means of providing directed feedback. Depending on whether the work is written or graphic, there are different cloud-based platforms suited for producing annotations. Perusall is designed for annotating written work, whereas collaborative whiteboard platforms (such as Miro, Mural, and Conceptboard) allow annotations on graphic work. For the latter, a tablet with an accompanying stylus is helpful. Check out this video of Mural in action from Nano Langenheim!

Peer-to-peer feedback can serve multiple purposes: providing alternative perspectives on a student’s work, improving generic skills related to criticism, and encouraging interaction between students. Tutors will need to consider the appropriate platform, whilst providing some form of scaffolding and terms of engagement to guide students (e.g. using the ladder model, reflective questions, etc.). Perusall and Feedback Fruits allow for anonymous feedback, which students have been found to prefer (Razi, 2016). For peer-to-peer feedback to be most effective, this form of interaction should ideally be an assessment requirement (e.g. via a reflective journal). ePortfolio in Canvas is an effective platform for students to develop and curate their journals and to provide comments and feedback to each other (Gordon, 2017). Depending on the cohort of students involved, encouraging voluntary participation in peer-to-peer feedback can be challenging. However, students often prefer peer-to-peer feedback to be part of their assessment (Fleischmann, 2019). Self-assessment activities also offer valuable benefits to students (visit the Learning Environments page on self-assessment).

Reflect

The final stage of the A+F Cycle involves taking a step back and reflecting on what has been achieved thus far. Good A+F practice involves educators intentionally reflecting on their process and identifying what has been successful and what could be further improved for the future. Some reflective questions to consider include:

- What do I want the students to learn?

- Does the assessment design afford students the ability to demonstrate their learning?

- Does the design of feedback afford students the ability to receive useful guidance on their learning?

- What else can be done to improve this process? What is working/not working for students?

- How can I better understand what is working/not working for students?

Students will also be reflecting on their own feedback practices as they develop their feedback literacy.